The first conference of Operational Machine Learning: OpML ‘19

I attended OpML ’19 is a conference for “Operational Machine Learning” held at Santa Clara on May 20th.

OpML ‘19

_The 2019 USENIX Conference on Operational Machine Learning (OpML ‘19) will take place on Monday, May 20, 2019, at the…_www.usenix.org

The scope of this conference is varied and seems not to be specified yet, even if I attended it. I’ll borrow the description from the OpML website.

The 2019 USENIX Conference on Operational Machine Learning (OpML ’19) provides a forum for both researchers and industry practitioners to develop and bring impactful research advances and cutting edge solutions to the pervasive challenges of ML production lifecycle management. ML production lifecycle is a necessity for wide-scale adoption and deployment of machine learning and deep learning across industries and for businesses to benefit from the core ML algorithms and research advances.

Overview of the conference

- The number of attendees was 210, they came from LinkedIn, Microsoft, Google, Airbnb, Facebook, etc.

- The target of “Operational Machine Learning” is diverse. I thought it focuses on MLOps things such as reproducibility, ML DSL for productionization, visualization, stakeholder management, but there are many talks about ML for system, system utilization optimization, SRE for ML, hardware accelerator, etc.

- There is a contrast between tech giants, e.g. Google, Uber, Facebook, Airbnb, Microsoft, and LinkedIn, and other followers. While ML lead companies are talking about their OSSs or ML infrastructures, following companies tend to talk about their specific use case or their solutions (those speakers seems to be small ML ventures).

Some interesting talks

Keynote: Ray: A Distributed Framework for Emerging AI Applications

- https://www.usenix.org/conference/opml19/presentation/jordan

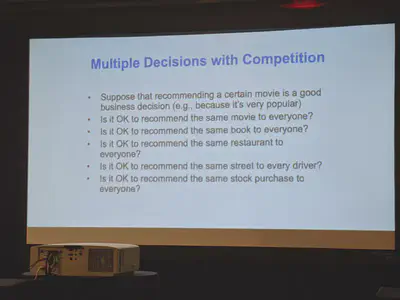

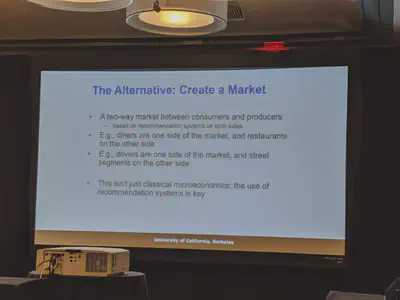

- Current target of Machine Learning is pattern recognition, but Jordan said decision-making will be the future of ML/AI

- Creating a “recommendation market” is the key

MLOp Lifecycle Scheme for Vision-based Inspection Process in Manufacturing

- https://www.usenix.org/conference/opml19/presentation/lim

- https://www.usenix.org/sites/default/files/conference/protected-files/opml19_slides_lim.pdf

- A challenge for defeat recognition by an image in edge applied for Samsung smartphone.

- They need to inference for 3000 GB images/day.

- The team structure which involves product inspectors and product managers is interesting

](/blog/2019-06-04_the-first-conference-of-operational-machine-learning--opml--19-308baad36108/1_5Ab748i-ppe-Lt1DreRiGQ_huc06df4231166899a512c905fd4edcf90_73766_7876a19d46f9c7af0bd458fbdd234c68.webp)

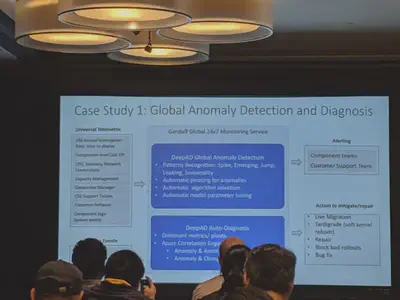

AIOps: Challenges and Experiences in Azure

- https://www.usenix.org/conference/opml19/presentation/li-ze

- Anomaly detection and diagnosis with lambda architecture for Azure

- Disk failure prediction for Azure which introduces proactively live to migrate the workloads to a healthy disk

How the Experts Do It: Production ML at Scale

A panel discussion for ML infrastructures

Lead and moderator: Joel Young, LinkedIn

Panelists:

- Sandhya Ramu, Director, AI SRE, LinkedIn

- Andrew Hoh, Product Manager, ML Infra and Applied ML, AirBNB

- Aditya Kalro, Engineering Manager, AI Infra Services and Platform, Facebook

- Faisal Siddiqi, Engineering Manager, Personalization Infrastructure, Netflix

- Pranav Khaitan, Engineering Manager, Personalization and Dialog ML Infra, Google

The important thing to keep top level is

- the lead time from experiment to production

- Flows build for production with involving different team

- Not everything is the highest priority. Metrics, dashboards are important

Cost of run/train vs Agility

- It’s hard to find down streaming use cases. (Airbnb)

- Monitor model resource usage

- Keep ML infrastructure extremely flexible

- Hard to force using a single framework

What are the important things for your ML platform?

- Reliability

- Scalability

- Developer productivity

- Agility (Available libraries etc)

- Enabling the latest technology

- Cost and impact of Machine Learning

Netflix

- How quickly/many A/B test we can do

- How rapid new researcher can do?

Airbnb

- Business impact

- # of users for the infrastructures

- How many inferences/scoring is done?

- Availability, scalability, cost, and long-term decision making

- Innovation aspect

- How can the ML infrastructure system will empower the next 5 yrs products?

Continuous Training for Production ML in the TensorFlow Extended (TFX) Platform

- https://www.usenix.org/conference/opml19/presentation/baylor

- TFX provides a library for recording and retrieving metadata for ML: ML Metadata https://www.tensorflow.org/tfx/guide/mlmd

](/blog/2019-06-04_the-first-conference-of-operational-machine-learning--opml--19-308baad36108/1_JjjlNJJ7xndhiOSddZv-zA_hu58c4d0a030e9446906d2c166b0d87e2d_70128_8f64f253ed957bceb8d6fba541ebd197.webp)

Disdat: Bundle Data Management for Machine Learning Pipelines

- https://www.usenix.org/conference/opml19/presentation/yocum

- https://github.com/kyocum/disdat

- Talk about OSS for ML pipeline and data versioning.

Predictive Caching@Scale

- https://www.usenix.org/conference/opml19/presentation/janardhan

- Traffic prediction for CDN (Akamai)

- Interesting cache strategy with covering prediction error